Over the last few days, I've coordinated setting up a new test environment for ImageRight, now that we've upgraded to version 5. Our previous test environment was still running version 4, which made it all but useless for current workflow development. However, workflow development isn't the only reason to set up an alternate ImageRight system - there are some other cool uses.

ImageRight has an interesting back-end architecture. While it's highly dependent on Active Directory for authentication (if you use the integrated logon method), the information about which other servers the application server and the client software should interact with is controlled entirely by database entries and XML setup files. Because of this, you can have different ImageRight application servers, databases and image stores all on the same network with no conflicts or sharing of information, and you don't need to provide a separate Active Directory infrastructure or network subnet.

While our ultimate goal was to provide a test/dev platform for our workflow designer, we also used this exercise as an opportunity to run a "mini" disaster recovery test so I could update our recovery documentation related to this system.

To set up a test environment, you'll need at least one server to hold all your ImageRight bits and pieces - the application server service, the database and the images themselves. For testing, we don't have enough storage available to restore our complete set of images, so we only copied a subset. Our database was a complete restoration, so test users will see a message about the system being unable to locate documents that weren't copied over.

I recommend referring to both the "ImageRight Version 5 Installation Guide" and the "Create a Test Environment" documents available on the Vertafore website for ImageRight clients. The installation guide will give you all the prerequisites needed to run ImageRight, and the document on test environments has details of which XML files need to be edited to ensure that your test server is properly isolated from your production environment. Once you've restored your database, image stores and install share (aka "Imagewrt$"), it's quick and easy to tweak the XML files and get ImageRight up and running.
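Before starting the test server, it can be worth double-checking that none of the edited XML files still point at production. The following is only a minimal sketch of that idea: the function name, the share path and the server names are hypothetical placeholders, and it assumes nothing about the actual ImageRight file layout beyond "XML files somewhere under the restored share". It simply searches every `.xml` file for the names of production machines.

```python
from pathlib import Path


def find_production_references(config_dir, production_names):
    """Map each XML file under config_dir to the production names it still mentions."""
    root = Path(config_dir)
    if not root.is_dir():
        return {}
    hits = {}
    for xml_file in sorted(root.rglob("*.xml")):
        # Compare raw bytes, lowercased, so the check is case-insensitive and
        # works for both UTF-8 and UTF-16 (little-endian) encoded files.
        data = xml_file.read_bytes().lower()
        found = [
            name
            for name in production_names
            if name.lower().encode("utf-8") in data
            or name.lower().encode("utf-16-le") in data
        ]
        if found:
            hits[str(xml_file)] = found
    return hits


# Example call (hypothetical share path and host names, substitute your own):
# find_production_references(r"\\testserver\Imagewrt$", ["IRPROD01", "SQLPROD01"])
```

An empty result doesn't prove the test server is isolated, since the database entries matter too, but a non-empty one is a cheap early warning that an XML file was missed.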

For our disaster recovery preparations, I updated our overall information about ImageRight and our step-by-step recovery guide, and burned a copy of our install share to a DVD so it can be included in our off-site DR kit. While you can download a copy of the official ImageRight ISO, I prefer to keep a copy of our expanded "Imagewrt$" share instead - especially since we've added hotfixes to the version we are running, which could differ from the current ISO available online from Vertafore.

Because setting up the test environment was so easy, I could also see some companies wanting to use alternate ImageRight environments for extra sensitive documents, like payroll or HR. I can't speak to the additional licensing costs of having a second ImageRight setup specifically for production, but it's certainly technically possible if using different permissions on drawers and documents doesn't meet the business requirements for some departments.

Monday, November 29, 2010

Monday, November 22, 2010

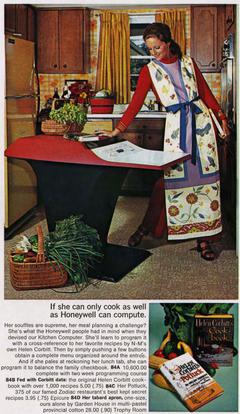

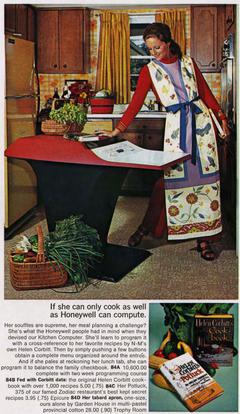

Will Computers Ever Become a Key Kitchen Tool?

With the holidays fast approaching, I can't help paying a little more attention to what's been going on in my kitchen, where mostly low-tech options reign. I enjoy cooking and with that comes my very basic way of organizing recipes - in binders, sorted by category. It's simple and it works for me.

I have a couple cookbooks on my Kindle and while I'm really happy with the Kindle for regular reading, it just hasn't made its way into the kitchen as a viable way to store and access recipes. I don't want to get it messy during food preparation and I want to be able to move it around and view it from various places while I'm working on a meal. A recipe card or magazine clipping in a small plastic sleeve always seems to work for me - I can tape it to a cabinet, slide it around on the counter and can wipe it off if I get greasy prints on it. Plus, the screen saver never kicks on.

Still, the desire to bring computing to the kitchen has never been far from the minds of people who work with technology. In 1969, Honeywell introduced the first "kitchen computer", the H316 pedestal model, offered by Neiman Marcus.

The need to take a two-week programming course and to read the binary display were probably among the reasons there is no record of one ever being sold.

Even so, ideas for computing in the kitchen keep rising to the surface. Check out this article in the San Francisco Chronicle today, covering the idea of a smart countertop, where cameras and a computer work together to identify food items and suggest recipes that use the ingredients available.

Maybe this will entice my husband to don an apron and practice his knife skills. Or not.

Wednesday, November 17, 2010

OfficeScan 10.5 - Installed, with some Oddities

I finally upgraded our office antivirus software to the latest and greatest version from Trend Micro. This has been on my list since springtime, and well, you know how those things go. Because the server that was hosting our existing version is aging rapidly, I opted to install the new version on a new, virtual installation of Windows Server 2008.

The installation went smoothly and lined up well with the installation guide instructions. Once that was running, I was easily able to move workstations and servers to the new service using the console from the OfficeScan 8 installation. Our OfficeScan 8 deployment had the built-in firewall feature enabled, which I opted to disable for OfficeScan 10. Because of this, the client machines were briefly disconnected from the network during the reconfiguration, which led me to wait until after hours to move any of our protected servers, to avoid losing connectivity during the work day.

Keep in mind that OfficeScan 10.5 does not support any legacy versions of Windows, so a Windows 2000 Server that is still being used here had to retain its OfficeScan 8 installation, which I configured for "roaming" via some registry changes. This allows it to get updates from the Internet instead of the local OfficeScan 8 server. Once that was done, I was able to stop the OfficeScan 8 service.

Some other little quirky things:

- You can't use the remote install (push) feature from the server console on computers running any type of Home Edition of Windows. I also had a problem installing on a Windows 7 machine, so I opted for the web-based manual installation. Check out this esupport document from Trend that explains the reason - Remote install on Windows 7 fails even with Admin Account.

- I wanted to run the Vulnerability Scanner to search my network via IP address range for any unprotected computers. However, the documentation stated that scanning by range only supports a class B address range, while my office is using a class C range. I couldn't believe that could actually be true, but after letting the scanner run a bit with my range specified and getting no results, I guess it is.

Next, I'll probably see that the client installation gets packaged up as an MSI, so we can have that set to automatically deploy using group policy.

Friday, November 12, 2010

IPv6: Yes, My Head is in the Sand

There has been a fair amount of chatter about the depleting IPv4 address space and how the adoption of IPv6 is looming. If you haven't seen it, check out the post at Howfunky.com on "The Ostrich Effect". Of particular interest is how a lot of network and system administrators are ignoring IPv6 altogether, and I admit I’m one of them. My head is firmly entrenched in the sand. While it might not be the best approach, I’ll explain why I am where I am.

First, I’m not going to tell you that I don’t think IPv6 will stick. It will. Also, I find it pretty interesting and would love to be able to meet it head on when the time comes to make the transition. But here’s the issue – I don’t see the pressing need right this moment for the infrastructure I work with and I have other projects that need my time and attention first. IPv6 just isn’t an emergency.

For the enterprise that I manage, our public facing Internet presence is very small. I have two /28 ranges assigned and I’m barely using half of those addresses as it is. I predict that I won’t need any additional addresses anytime soon. Internally, we are privately addressed and we have several legacy applications that will never be rewritten or patched to support IPv6.
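To put numbers on "two /28 ranges", here is a quick sketch using Python's standard `ipaddress` module. The two prefixes are documentation-only ranges (RFC 5737) standing in for the real assignments, which aren't given here, and the `summarize` helper is just an illustrative name.

```python
import ipaddress

# Placeholder prefixes from the documentation ranges, not real assignments.
assigned = [
    ipaddress.ip_network("192.0.2.0/28"),
    ipaddress.ip_network("198.51.100.16/28"),
]


def summarize(networks):
    """Return (total addresses, usable host addresses) across the networks."""
    total = sum(net.num_addresses for net in networks)
    # hosts() leaves out the network and broadcast address of each range.
    usable = sum(len(list(net.hosts())) for net in networks)
    return total, usable


total, usable = summarize(assigned)
print(f"{total} addresses assigned, {usable} usable as hosts")
# prints "32 addresses assigned, 28 usable as hosts"
```

With only 28 usable addresses in total and barely half of them in use, there is very little IPv4 pressure on the public side.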

Of course, I know that some of our newer servers and workstations are automatically establishing IPv6 addresses for themselves and we should be utilizing that by embracing the dual stack technology that’s built into our newer Windows machines. If nothing else, I should have a better handle on what’s going on automatically when those machines connect to our network.
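As a small first step toward getting that handle, a script along these lines could show which IPv6 addresses a machine reports for itself and roughly what kind each one is. This is a sketch under assumptions: the function names and category labels are made up for illustration, and `socket.getaddrinfo` on the machine's own host name may not return every address an interface carries.

```python
import ipaddress
import socket


def classify_ipv6(address):
    """Label an IPv6 address string by its general type."""
    # Drop any zone suffix such as "%eth0" or "%11" before parsing.
    ip = ipaddress.IPv6Address(address.split("%", 1)[0])
    if ip.is_loopback:
        return "loopback"
    if ip.is_link_local:
        # fe80::/10, the range hosts assign themselves automatically.
        return "link-local (self-assigned)"
    if ip in ipaddress.ip_network("fc00::/7"):
        return "unique local"
    return "global or other"


def local_ipv6_addresses():
    """Return the IPv6 addresses the resolver reports for this host, if any."""
    try:
        infos = socket.getaddrinfo(socket.gethostname(), None, socket.AF_INET6)
    except OSError:
        # No IPv6 results (or no resolvable host name) on this machine.
        return []
    return sorted({info[4][0] for info in infos})


for addr in local_ipv6_addresses():
    print(addr, "->", classify_ipv6(addr))
```

A machine that shows only link-local addresses has configured IPv6 for itself, but nothing on the network is handing it a routable prefix yet.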

I also know that at some point we’ll need external IPv6 addresses on the ‘net, so others who are using the protocol can access our mail and web servers. I’m sort of hoping that our ISP will contact me one day and say “Here! These IPv6 addresses are for you and this is what you do with them!”

Wishful thinking, I know. But right now, that’s all I can afford.

Tuesday, November 9, 2010

The Post-Mortem of a Domain Death

The past few days have been busy as we've been performing the tasks to remove our failed domain controller and domain from our Windows 2003 Active Directory forest. Now that everything is working normally and I can check off that long-standing IT project of "remove child domain" from my task list, I'd like to share a few things we've learned.

- NTDSUTIL will prompt you several times when it comes to removing the last DC in a domain using the steps in KB 216498. It will even hint that since you are removing the last DC in the domain, you are also removing the domain itself. But you are not. You must take additional steps in NTDSUTIL to remove the orphaned domain; see KB 230306 to finish up.

- How do you know you have an orphaned domain? Check AD Domains and Trusts. If you still see a domain in your tree that you can't view the properties of, you aren't done yet. Also, if your workstations still show the domain as a logon option in the GINA, get back to work.

- You might remember to clean up your DNS, but don't forget to also clean up WINS. WINS resolution can haunt you and keep your workstations and applications busy looking for something that isn't there anymore.

- Watch your Group Policy links. If you've cross-linked policies from the child domain to your forest root or other domains, workstations will log USERENV errors referencing the missing domain. Policies from other domains won't show up in your "Group Policy Objects" container in the GPMC. You'll need to expand all your other OUs in the GPMC to find any policy links that report an error.

- If you are using a version of Exchange that has the infamous Recipient Update Service, remove the service entry that handles the missing domain. You'll see repeated MSExchangeAL Events 8213, 8250, 8260 and 8026 on your mail server otherwise.
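For the DNS and WINS cleanup items in the list above, one rough way to spot leftovers is to see whether names from the removed domain still resolve from a workstation. The sketch below is illustrative only: the function name and host names are hypothetical, and it relies on the operating system's resolver, so what it can see (DNS only, or also NetBIOS/WINS for short names on Windows) depends on how the machine is configured.

```python
import socket


def still_resolving(hostnames, resolver=socket.gethostbyname):
    """Return {hostname: address} for names that still resolve."""
    stale = {}
    for name in hostnames:
        try:
            stale[name] = resolver(name)
        except OSError:
            # Resolution failed, which is the desired outcome after cleanup.
            continue
    return stale


# Example call (hypothetical names for the removed DC and child domain):
# still_resolving(["olddc01", "olddc01.child.example.com", "child.example.com"])
```

Anything that comes back from a call like this is worth tracing to the DNS zone or WINS database that is still answering for it.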

We found that a combination of the WINS resolution and the orphaned trust relationship distracted ImageRight enough that it was slow to operate, sometimes refused to load at all, and hung on particular actions. If you happen to be an ImageRight customer who uses the Active Directory integration features, keep in mind that it likes all the AD ducks to be in a row.

While we had a little bit of pain getting to this point, I'm really happy that our AD forest is neater and cleaner because of it. It'll make it much easier to tackle other upgrade projects on the horizon for Active Directory and Exchange.

Friday, November 5, 2010

Don’t Miss Out on gogoNET Live! Videos

On Wednesday, I had the pleasure of doing the post-presentation interviews for the speakers at the gogoNET Live! IPv6 Conference. These short little chats should be posted at www.gogonetlive.com in the next few days and will give you a taste of what each presentation included and some tips for implementing IPv6. Hopefully I’ll have some time in the next few weeks to listen to some of the full presentations (soon to be available as well), so here are a few that will be on my list.

- Bob Hinden, Check Point Fellow and Co-inventor of IPv6 - his presentation on why IPv6 was invented will give anyone a good overview of why IPv6 is a necessary move for anyone who uses or supports activities on the Internet.

- Elise Gerich, Vice President of IANA and John Curran, President and CEO of ARIN – both spoke about the various aspects of the anatomy of IPv4 address depletion. I’ve always been fascinated by the DNS and IP address infrastructures that make the internet work and you can’t get any closer to the source than with these industry executives.

- Silvia Hagen, CEO of Sunny Connection – Silvia’s presentation was on how to convince your boss to make the move to IPv6. She’s also the author of the O’Reilly book on IPv6, so trust that her ideas are good ones.

- Jeremy Duncan, the Senior Director of IPv6 Network Services at Command Information – Jeremy focused on how to set up and get the most out of your test/lab network. We all will have to start somewhere when it comes to learning about IPv6 and some good tips on getting your lab off the ground will go a long way.

- Joe Klein, the Cyber Security Principal Architect at QinetiQ – IPv6 has many security features built right in. Be sure to check out what Joe has to say about the features, changes and possibilities once IPv6 is well established.

Tuesday, November 2, 2010

Virtualized Domain Controllers? I’ll pass, thanks.

There are a lot of good arguments for virtualizing DCs. You should have several of them for redundancy, but depending on the number of employees and general work load, DCs tend to be underutilized and it can be hard to warrant having a whole physical server for each one. But after losing a second domain controller following what was essentially basic VM maintenance, I’m not sold.

You may remember a previous post of mine from the summer of 2009 about NTDS Error 2103, when the DCs in a small child domain were virtualized. I had agreed to virtualize both DCs from that domain as the domain was not supporting any user accounts and had less than a half dozen servers as members. One did not convert well and we decided to just leave the remaining DC as the sole one standing for that domain after vetting the risks. There are several “rules” to follow when virtualizing DCs, particularly not restoring snapshots of them and not putting yourself in the situation where your VM host machine needs to authenticate to DCs that can’t start up until your host authenticates.

Fast forward about 16 months, to now. Our system administrator who handles the majority of our ESX management was migrating many of our VMs to our newly installed SAN. He reported that he shut down the DC normally, moved the VM and then started it back up a few hours later after all the server files had been copied over. The few servers that use that DC were working properly and everything looked good.

But alas, a few weeks later, the server reported a USN rollback condition. Replication and netlogon services stopped. I checked the logs to see if I could figure out the cause, but only saw things that added to the confusion. The DC was mysteriously missing logs from between the time of the VM relocation and the time of the NTDS error. And the forest domain controllers had logs indicating it had been silent for nearly 2 weeks. At this point, I can only speculate what went bad.

We slapped a bandage on the server by restarting netlogon so those few servers could authenticate, but without replication happening properly, the server will simply choke up again. And after the tombstone lifetime passes, the forest domain will consider it a lost cause. It’s essentially a zombie.
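To get a feel for how long a "zombie" DC has before the rest of the forest gives up on it, the arithmetic is simple enough to sketch. The dates below are made up, and the 60-day default is an assumption: it is the usual tombstone lifetime for forests created on Windows 2000 or the original Windows Server 2003 release, while forests created on later service packs typically use 180 days. The real value is whatever your forest's `tombstoneLifetime` setting says.

```python
from datetime import date, timedelta


def tombstone_deadline(last_replication, tombstone_lifetime_days=60):
    """Date after which a DC that has not replicated is past the tombstone lifetime."""
    return last_replication + timedelta(days=tombstone_lifetime_days)


def days_remaining(last_replication, today, tombstone_lifetime_days=60):
    """Days left before the deadline; negative once it has passed."""
    return (tombstone_deadline(last_replication, tombstone_lifetime_days) - today).days


# Hypothetical dates: last good replication on October 15, checked on November 2.
print(tombstone_deadline(date(2010, 10, 15)))                 # 2010-12-14
print(days_remaining(date(2010, 10, 15), date(2010, 11, 2)))  # 42
```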

So begin our final steps to decommission that child domain. I have no interest in restoring that domain from backup, since removing that domain has been an operations project that has been bumped for a long time. Now our hand has been forced and the plan is simple. Change a couple service accounts, move 2 servers to join the forest root domain and then NTDSUTIL that DC into nothingness.

As for our two forest root domain controllers? I’ll throw my body in front of their metal cases for a long time to come.
